Power Data with Semantic Intelligence

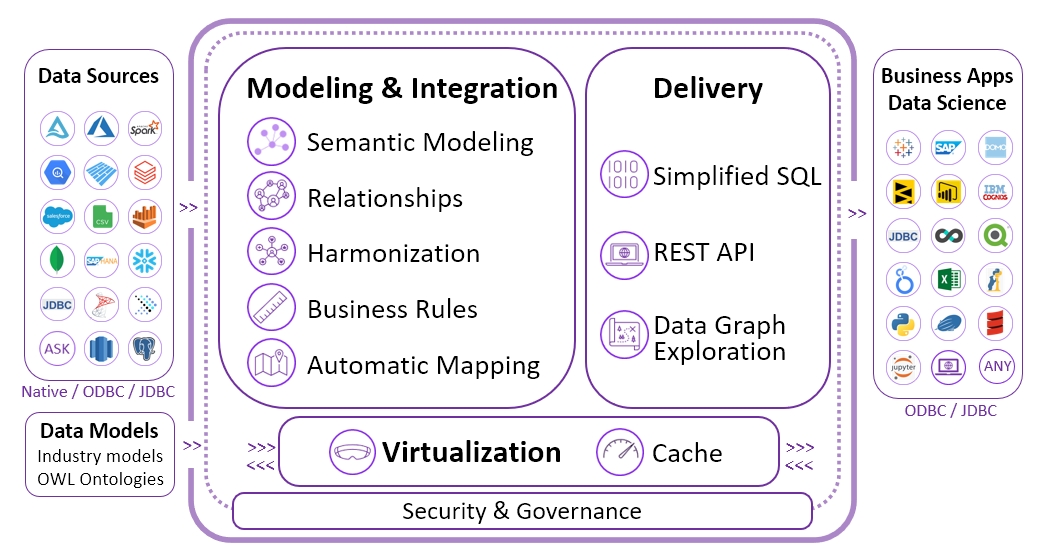

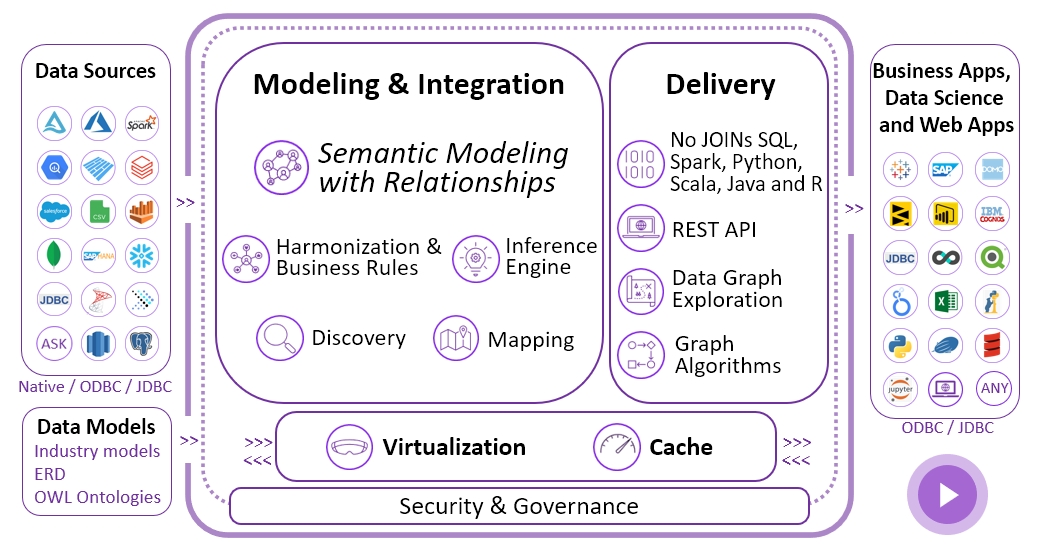

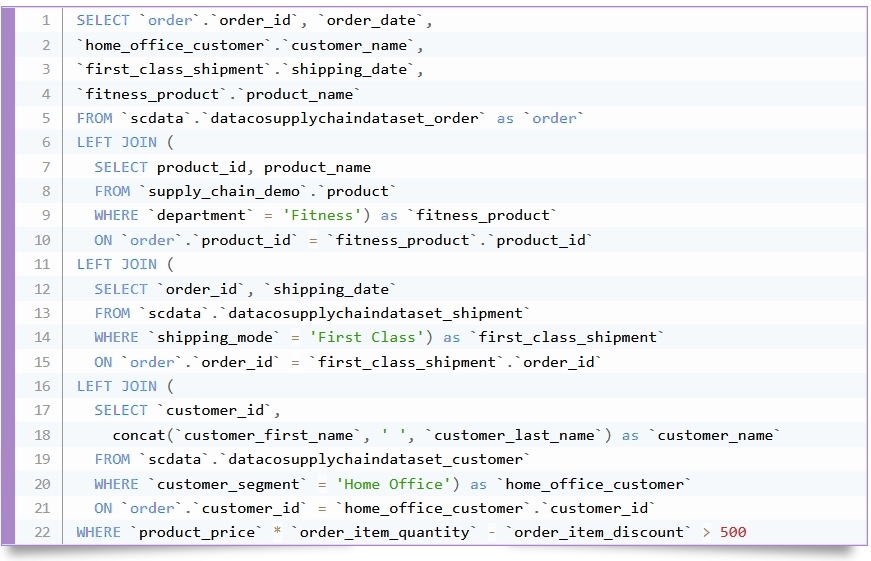

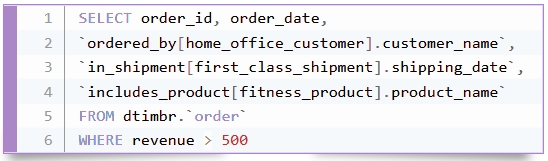

Model and integrate data with business meaning and relationships, unify metrics, and accelerate delivery of data products with 90% shorter SQL queries and a semantic REST API.

“Timbr is the most powerful, yet simple to implement semantic platform for the SQL ecosystem”

Industry leaders use Timbr to connect data in a meaningful way:

Automate Modeling of Data to Accelerate Consumption

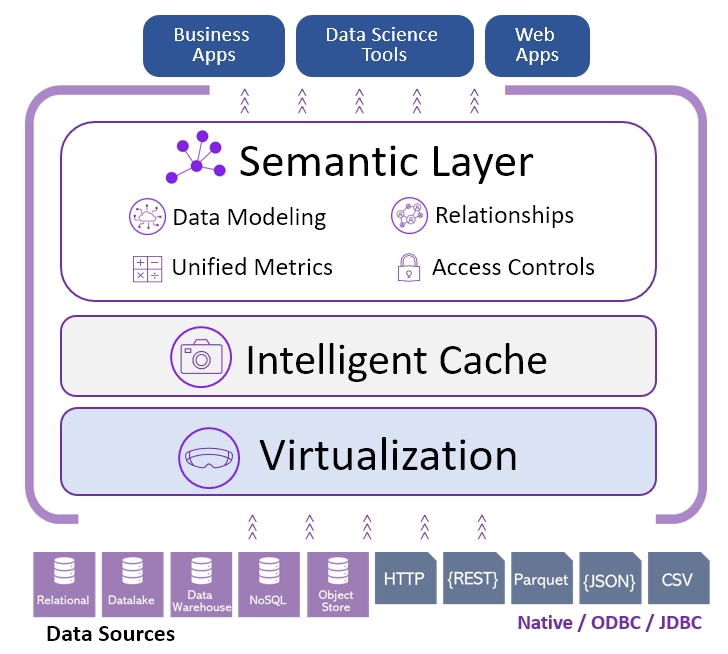

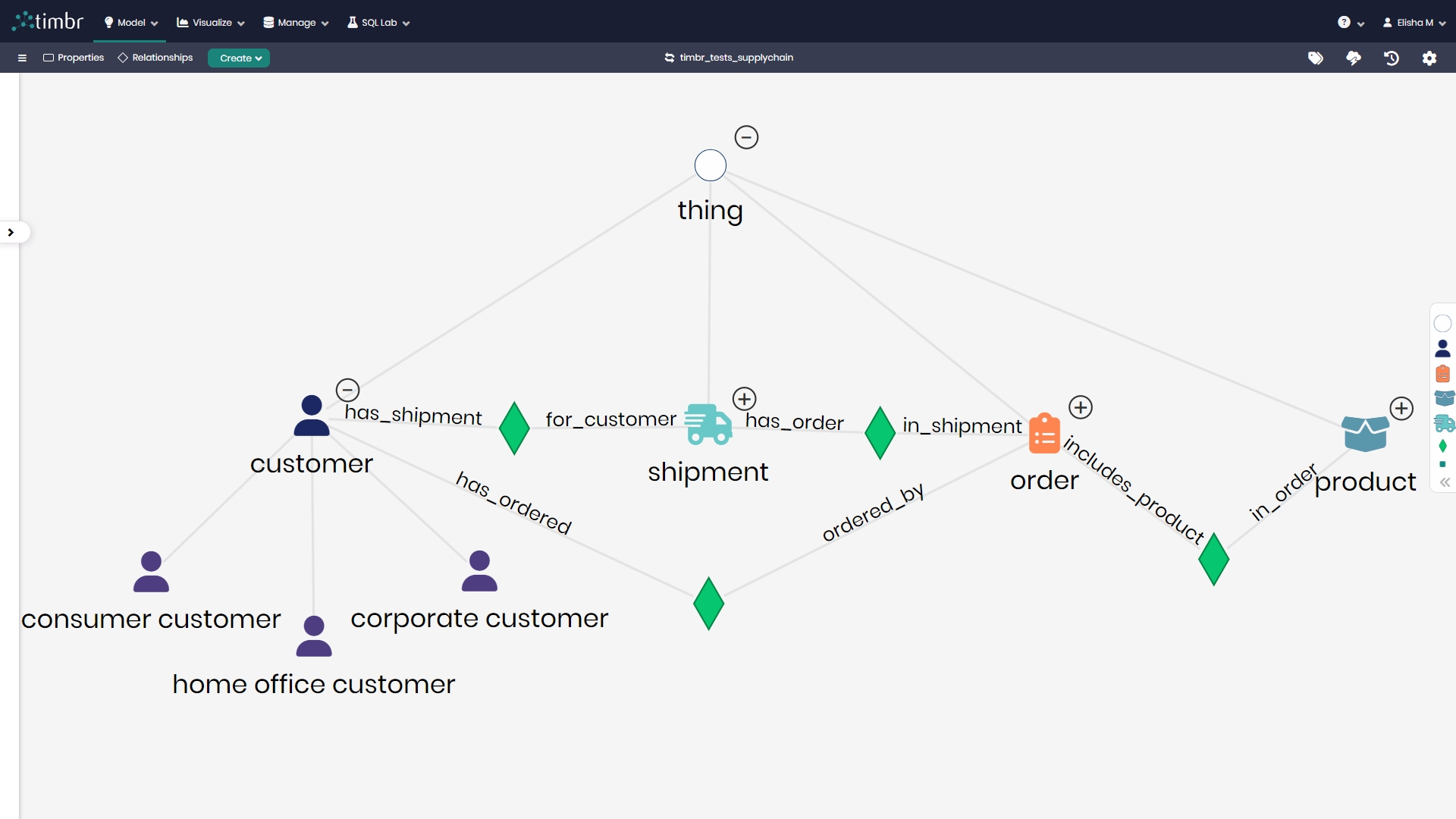

- Easily model data using business terms to give it common meaning and align business metrics

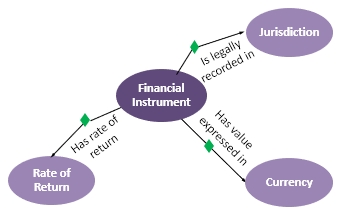

- Define semantic relationships that substitute JOINs so queries become much simpler

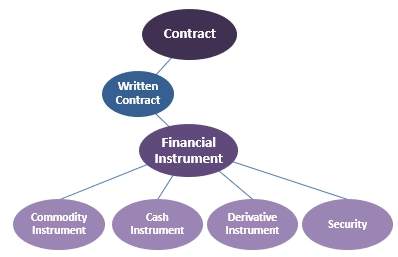

- Use hierarchies and classifications to better understand data

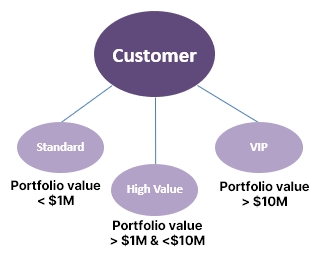

- Use SQL logic and math operators to filter data and define business rules

- Import industry data models and OWL ontologies to create semantic data models
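To make the idea of relationships substituting JOINs concrete, here is a minimal sketch in Python. It is not Timbr's syntax or implementation: the `MODEL` dictionary, the `expand` function, the `placed_order` relationship and the table names are all hypothetical, chosen only to show how a join condition declared once in a model can be reused by a much shorter query.

```python
import sqlite3

# Hypothetical semantic model (an assumption, not Timbr's format): each concept
# maps to a table, and each named relationship records its join keys once.
MODEL = {
    "customer": {
        "table": "customers",
        "relationships": {
            # relationship name -> (target concept, local key, target key)
            "placed_order": ("order", "customer_id", "customer_id"),
        },
    },
    "order": {"table": "orders", "relationships": {}},
}


def expand(concept, columns):
    """Translate dotted paths such as 'placed_order.total' into SQL with JOINs."""
    base = MODEL[concept]
    joins, select = [], []
    for col in columns:
        if "." in col:
            rel, field = col.split(".", 1)
            target, local_key, target_key = base["relationships"][rel]
            join = (
                f"JOIN {MODEL[target]['table']} AS {rel} "
                f"ON {rel}.{target_key} = base.{local_key}"
            )
            if join not in joins:  # add each relationship's JOIN only once
                joins.append(join)
            select.append(f"{rel}.{field}")
        else:
            select.append(f"base.{col}")
    return " ".join(
        [f"SELECT {', '.join(select)} FROM {base['table']} AS base", *joins]
    )


# Small in-memory database with illustrative data.
conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE customers (customer_id INTEGER, name TEXT);
    CREATE TABLE orders (order_id INTEGER, customer_id INTEGER, total REAL);
    INSERT INTO customers VALUES (1, 'Ada'), (2, 'Grace');
    INSERT INTO orders VALUES (10, 1, 25.0), (11, 2, 40.0);
    """
)

# The caller names a relationship instead of writing the JOIN by hand.
sql = expand("customer", ["name", "placed_order.total"])
rows = sorted(conn.execute(sql).fetchall())
print(sql)
print(rows)
```

The caller writes only `["name", "placed_order.total"]`; the join keys live in the model, so a change to the underlying tables would be made in one place rather than in every query.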

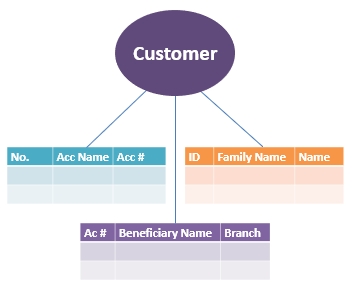

Semantic Representation of Data

Semantic Relationships

Hierarchies and Classifications

Business Logic

Semantic Reasoning

Integrate Data

- Automatically map data to the semantic model

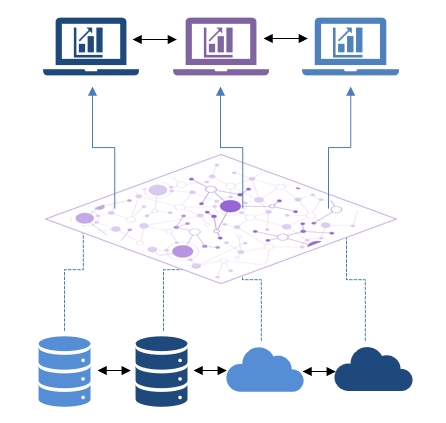

- Join multiple data sources with a powerful distributed SQL engine to query data at scale

- Consume data as a connected semantic graph

- Benefit from advanced query optimizations

- Boost performance and save compute costs with an intelligent cache engine and materialized views

Connect Any Data Source

Connect to most clouds, data lakes, data warehouses, databases and any file format. Timbr empowers you to work with your data sources seamlessly.

How you store your data is up to you. By default, nothing is stored in Timbr. When a query is run, Timbr optimizes the query and pushes it down to the backend.

Consume Data However You Need

Benefit From Short SQL Queries

Visually Explore Data as a Graph

- Traverse your data across tables and sources

- Identify relationships and dependencies

- Perform network analysis

Expose Data To All Consumers

Power Web Apps with Timbr's REST API

- Simplified Data Access: Draw from multiple data sources

- Data Fetching: Request only the fields needed

- Data Nesting: Fetch nested data structures

- Semantic Swagger: Self-documentation (OpenAPI spec)

- Relationship Handling: Query complex relationships in data, simplified by the semantic graph

- Reduce Network Payload: Enjoy query optimization when data is nested and fetched in a single call

- Security: Role-based and row-level access control
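The field-selection and nesting behavior listed above can be illustrated with a short sketch. This is not Timbr's REST API: the `project` function, the dotted-path convention and the sample record are hypothetical, and only show how returning just the requested fields, including nested ones, can shrink a response that is fetched in a single call.

```python
def project(record, fields):
    """Return only the requested fields; dotted paths descend into nested data."""
    result = {}
    for path in fields:
        head, _, rest = path.partition(".")
        value = record[head]
        if not rest:
            # Plain field: copy it as-is.
            result[head] = value
        elif isinstance(value, list):
            # One-to-many relationship: project each nested item, merging
            # fields requested by separate paths into the same item.
            items = result.setdefault(head, [{} for _ in value])
            for item, source in zip(items, value):
                item.update(project(source, [rest]))
        else:
            # One-to-one relationship: project the single nested object.
            result.setdefault(head, {}).update(project(value, [rest]))
    return result


# Illustrative record: a customer with nested orders.
customer = {
    "name": "Ada",
    "email": "ada@example.com",
    "orders": [
        {"order_id": 10, "total": 25.0, "status": "shipped"},
        {"order_id": 11, "total": 40.0, "status": "open"},
    ],
}

# Request only the customer's name and two fields of each nested order.
payload = project(customer, ["name", "orders.order_id", "orders.total"])
print(payload)
```

Fields that were not requested (`email`, `status`) never appear in `payload`, which is the effect the "Data Fetching" and "Reduce Network Payload" points describe.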

Decouple Consumption from Data

- Benefit from reusable common metrics and naming conventions across BI tools, scripts, ML models and applications

- Update the underlying data structure without disrupting end-users and tools

- Push down compute from BI tools to the cloud data warehouse (CDW)

- Avoid lock-in of data stores and consumption tools

- Accelerate cloud migration

- Build systems that are easier to maintain

Easy and Fast Implementation

- Fully virtual, no ETLs required

- Low code UI or familiar SQL

- JDBC/ODBC compliant, accessible to any BI and Data Science tool

Choice of Deployment Options

- SaaS, cloud or on-premises

- Available on Azure, GCP and AWS

- Containerized in Kubernetes

Tackle your data challenges faster and with minimum effort

1 GARTNER Cool Vendors in Data Management, June 01, 2021. ID: G00746797.

Analysts: Philip Russom, Ehtisham Zaidi, Jason Medd, Eric Thoo, Robert Thanaraj. Gartner does not endorse any vendor, product or service depicted in its research publications, and does not advise technology users to select only those vendors with the highest ratings or other designation. Gartner research publications consist of the opinions of Gartner’s research organization and should not be construed as statements of fact. Gartner disclaims all warranties, expressed or implied, with respect to this research, including any warranties of merchantability or fitness for a particular purpose. The GARTNER COOL VENDOR badge and GARTNER are registered trademarks and service marks of Gartner, Inc. and/or its affiliates in the U.S. and internationally and are used herein with permission. All rights reserved.